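NVIDIA Docker is an open-source project hosted on GitHub. Its nvidia-docker command is essentially a wrapper around the docker command that transparently provisions a container with the components it needs to run code on the GPU. The project provides the following two components: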

1.driver-agnostic CUDA images;

2.a Docker command line wrapper that mounts the user mode components of the driver and the GPUs (character devices) into the container at launch.

I. Installing Docker and NVIDIA Docker

Before building a containerized GPU application, install the following software:

- The latest NVIDIA driver

- Docker

- ‘nvidia-docker’

The driver and Docker were already installed in earlier posts (see "install Tensorflow" and "install and learn docker"), so only nvidia-docker is installed here.

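1. Installing nvidia-docker

These steps cover Ubuntu 16.04 only; for other distributions, see the installation instructions on GitHub.

The NVIDIA driver and Docker must both be installed before nvidia-docker is installed.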
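A quick way to confirm both prerequisites before continuing is to check that their command-line tools are on the PATH. The following is a minimal sketch: `missing_tools` is a hypothetical helper name, and the check assumes the driver ships the `nvidia-smi` utility. It only shows that the commands exist, not that the driver is the latest version.

```shell
# Hypothetical helper: print the name of every command in the
# argument list that cannot be found on PATH.
missing_tools() {
    for tool in "$@"; do
        command -v "$tool" >/dev/null 2>&1 || echo "$tool"
    done
    return 0
}

# nvidia-smi stands in for "the NVIDIA driver is installed" (an assumption).
missing="$(missing_tools nvidia-smi docker)"
if [ -n "$missing" ]; then
    echo "Not found on PATH:" $missing
else
    echo "nvidia-smi and docker are both available."
fi
```

If anything is reported as missing, install it first and then come back to the steps below.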

    # If you have nvidia-docker 1.0 installed: we need to remove it and all existing GPU containers
    docker volume ls -q -f driver=nvidia-docker | xargs -r -I{} -n1 docker ps -q -a -f volume={} | xargs -r docker rm -f
    sudo apt-get purge -y nvidia-docker

    # Add the package repositories
    curl -s -L https://nvidia.github.io/nvidia-docker/gpgkey | \
      sudo apt-key add -
    distribution=$(. /etc/os-release;echo $ID$VERSION_ID)
    curl -s -L https://nvidia.github.io/nvidia-docker/$distribution/nvidia-docker.list | \
      sudo tee /etc/apt/sources.list.d/nvidia-docker.list
    sudo apt-get update

    # Install nvidia-docker2 and reload the Docker daemon configuration
    sudo apt-get install -y nvidia-docker2
    sudo pkill -SIGHUP dockerd

    # Test nvidia-smi with the latest official CUDA image
    docker run --runtime=nvidia --rm nvidia/cuda nvidia-smi

Following the steps above, the installation completed without any problems.
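The least obvious line in the install script is the one that sets `distribution`: it sources /etc/os-release in a subshell and joins the `ID` and `VERSION_ID` fields to pick the right package repository. The sketch below reproduces that step against a throwaway file, so it can be run on any machine. The file contents are sample values for Ubuntu 16.04, not read from a real system.

```shell
# Create a temporary os-release file with sample Ubuntu 16.04 values.
tmpfile="$(mktemp)"
cat > "$tmpfile" <<'EOF'
NAME="Ubuntu"
ID=ubuntu
VERSION_ID="16.04"
EOF

# Source the file inside a command substitution (a subshell), so ID and
# VERSION_ID do not leak into the current shell, then concatenate them.
distribution="$(. "$tmpfile"; echo "$ID$VERSION_ID")"
rm -f "$tmpfile"

echo "$distribution"
# The repository list URL the install script would then download:
echo "https://nvidia.github.io/nvidia-docker/$distribution/nvidia-docker.list"
```

With these sample values the first line printed is ubuntu16.04, which becomes the path component in the repository URL.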

Run the following command to verify the installation:

    nvidia-docker run --rm hello-world

II. Building a containerized GPU application

1. Development environment

Pull the CUDA image from Docker Hub:

    nvidia-docker pull nvidia/cuda

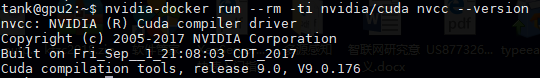

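Check the version of the CUDA compiler inside the image:

    nvidia-docker run --rm -ti nvidia/cuda nvcc --version

To look around inside the image, start an interactive shell with the following command; type exit to leave:

    nvidia-docker run --rm -ti nvidia/cuda bash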

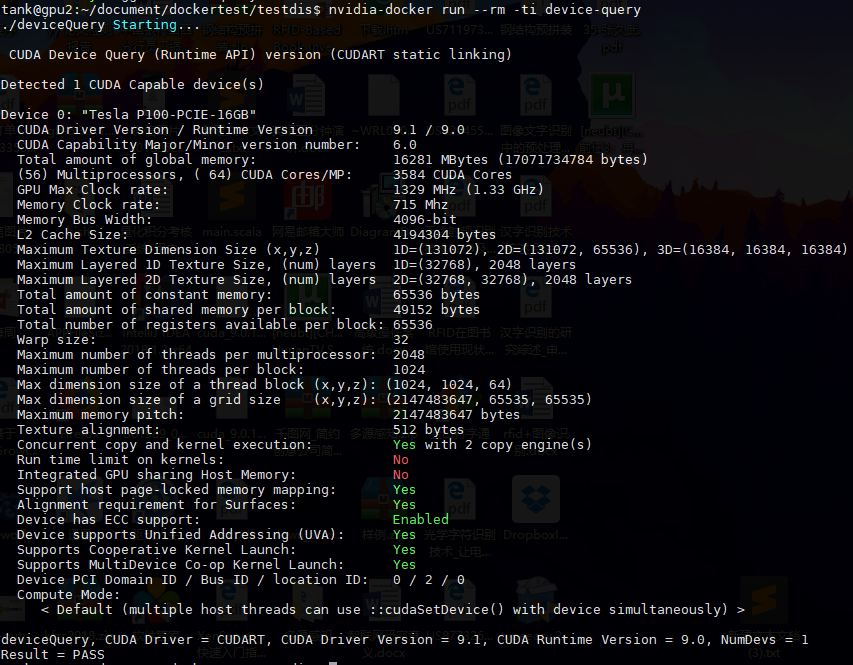

2. Running a containerized CUDA application

The Dockerfile contents are as follows:

    # FROM defines the base image
    FROM nvidia/cuda

    # RUN executes a shell command
    # You can chain multiple commands together with &&
    # A \ is used to split long lines to help with readability
    # This particular instruction installs the source files
    # for deviceQuery by installing the CUDA samples via apt
    RUN apt-get update && apt-get install -y --no-install-recommends \
            cuda-samples-$CUDA_PKG_VERSION && \
        rm -rf /var/lib/apt/lists/*

    # set the working directory
    WORKDIR /usr/local/cuda/samples/1_Utilities/deviceQuery

    RUN make

    # CMD defines the default command to be run in the container
    # CMD is overridden by supplying a command + arguments to
    # `docker run`, e.g. `nvcc --version` or `bash`
    CMD ./deviceQuery

After saving the Dockerfile, build the image from the directory that contains it:

    nvidia-docker build -t device-query .

Once the build finishes, run the image:

    nvidia-docker run --rm -ti device-query

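By default, nvidia-docker maps all of the host's NVIDIA GPUs into the container. The NV_GPU environment variable controls which GPU is exposed instead:

    NV_GPU=1 nvidia-docker run --rm -ti device-query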
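To make the NV_GPU mechanism less opaque: with the nvidia-docker2 package installed above, the nvidia-docker wrapper adds `--runtime=nvidia` to `docker run` and, as far as I can tell, passes NV_GPU on to the container as the NVIDIA_VISIBLE_DEVICES environment variable, with spaces in the list turned into commas. The sketch below only prints the equivalent plain docker command and never starts a container. `nv_gpu_to_docker_cmd` is a hypothetical helper written for this post, not part of nvidia-docker.

```shell
# Hypothetical helper: print (do not run) the plain docker command that
# corresponds to `NV_GPU=<ids> nvidia-docker run <args>`.
# Usage: nv_gpu_to_docker_cmd "<gpu ids>" <docker run arguments...>
nv_gpu_to_docker_cmd() {
    gpus="$1"
    shift
    cmd="docker run --runtime=nvidia"
    if [ -n "$gpus" ]; then
        # A space-separated GPU list becomes a comma-separated one: "0 1" -> "0,1"
        devices="$(printf '%s' "$gpus" | tr ' ' ',')"
        cmd="$cmd -e NVIDIA_VISIBLE_DEVICES=$devices"
    fi
    echo "$cmd $*"
}

nv_gpu_to_docker_cmd "1" --rm -ti device-query
```

For the command above this prints `docker run --runtime=nvidia -e NVIDIA_VISIBLE_DEVICES=1 --rm -ti device-query`, which should behave the same as the NV_GPU=1 invocation.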

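3. Deploying a GPU container

Tag the image with a Docker Hub repository name:

    nvidia-docker tag device-query dockerkotar/device-query

Push it to Docker Hub (log in first; see "share image"):

    nvidia-docker push dockerkotar/device-query

Once the push completes, running the image from Docker Hub gives the same result as before:

    nvidia-docker run --rm -ti dockerkotar/device-query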
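The tag and push steps always go together, so they can be wrapped in a small function. This is only a sketch: `publish_image` and the `DOCKER` override variable are names invented here, and the function assumes you have already logged in to Docker Hub.

```shell
# Command used to talk to Docker; set DOCKER=echo for a dry run
# that prints the arguments instead of executing anything.
DOCKER="${DOCKER:-nvidia-docker}"

# Hypothetical helper: tag a local image under a Docker Hub account,
# then push it. The push only happens if the tag step succeeds.
# Usage: publish_image <local-image> <hub-user>
publish_image() {
    image="$1"
    user="$2"
    "$DOCKER" tag "$image" "$user/$image" && "$DOCKER" push "$user/$image"
}
```

Calling `publish_image device-query dockerkotar` would repeat the two commands used in this section. Setting `DOCKER=echo` beforehand lets you check the generated arguments without touching the registry.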

4. Docker for Deep Learning and HPC

    nvidia-docker run --name digits --rm -ti -p 8000:34448 nvidia/digits

This command runs the nvidia/digits image and maps port 34448 inside the container to port 8000 on the host.
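The order of the two numbers in `-p` is easy to get backwards: the host port comes first and the container port second. The sketch below splits a mapping string to make that explicit. `describe_port_mapping` is a hypothetical helper for illustration, and it handles only the simple HOST:CONTAINER form used here.

```shell
# Hypothetical helper: explain a `-p HOST:CONTAINER` publish argument.
describe_port_mapping() {
    mapping="$1"
    host_port="${mapping%%:*}"        # text before the first colon
    container_port="${mapping##*:}"   # text after the last colon
    echo "host port $host_port -> container port $container_port"
}

describe_port_mapping 8000:34448
```

With this mapping, a service listening on port 34448 in the container would be reached from the host at http://localhost:8000.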

The following error appeared during deployment:

    libdc1394 error: Failed to initialize libdc1394

The workaround is to add -v /dev/null:/dev/raw1394 when starting the container:

    nvidia-docker run --name digits --rm -ti -p 8000:34448 -v /dev/null:/dev/raw1394 nvidia/digits

This removed the error, but the following warning still appears and DIGITS still does not run correctly:

    warnings.warn('Matplotlib is building the font cache using fc-list. This may take a moment.')
    2018-06-14 08:43:17 [INFO ] Loaded 0 jobs.

Still to be resolved: